Intelligence and compression

2025-05-28 / 20 min read- A musical analogy

- The two parts to compression

- Prediction leads to compression

- Causally dependent environments and time ordering

- Good compressors will leverage causal dependencies

- Compressing the model

- Updating the model

- The AIXI formulation of universal intelligence

- The Free Energy Principle and active inference

What is the relationship between Compression, prediction, learning, understanding, and intelligence?

Compression is a beautifully simple idea that anyone can grasp quickly and intuitively: make data small. It's a well-defined and objective measure. We are often drawn to simple ideas (kind of the point of this essay) and as such compression is frequently used to frame concepts in AI and provide a lens through which to understand them.

A musical analogy #

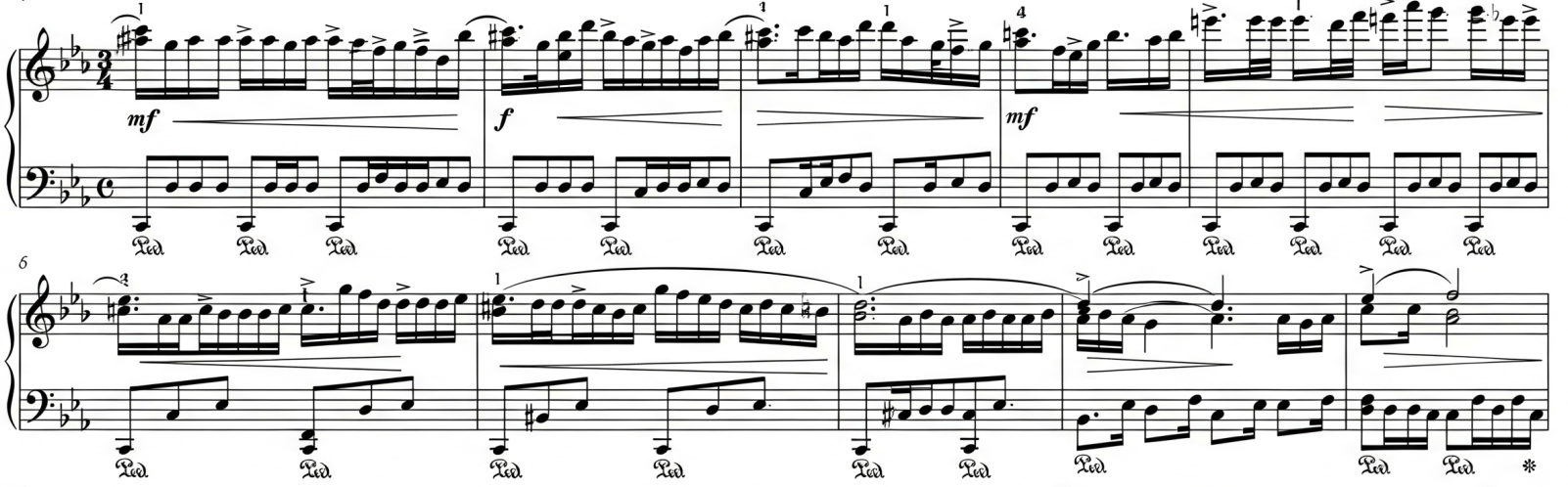

Let's start with a non-technical example. Here's a piece of music, represented as a score:

To the untrained eye, this score contains a tremendous amount of information. If I wanted to remember this, I would need to remember most notes. I can see some patterns, obvious repetitions, but that's about the only structure I can easily extract.

To the trained eye, however, it's a very different story. A musician might immediately recognize the key or scale of parts of this score. While I might see each note as one of roughly twenty possibilities, a musician, having played enough music, knows what typically comes next and can rule out many notes that would sound wrong. They may further recognize patterns I don't see: chords, chord progressions, arpeggios - structures in music that have names. Where I see many individual notes, they might see "an F♯ diminished seventh arpeggio sweeping up three octaves."

So, what is the musician doing that I am not? Many things, but one way to look at this is that they are compressing the data more effectively. They recognize patterns and "chunk" blocks of notes into single, smaller terms related to their prior experience. They can use a symbol or a short code-word to compress what they see and importantly, they do this by relating it back to things they already know - their "prior" knowledge.

If you could meaningfully measure the number of bits required to store a human thought or memory, a musician would need to store far fewer bits than I would to reproduce the score above. In that sense, they have compressed the data better than I have.

The two parts to compression #

From the above anology, we can immediately see there are two parts to the compression story, two forms of data that we might want to compress:

- The current or future stream of data that we experience, in the case above, this particular piece of music

- The prior knowledge we already have, that plays a role in how we interpret and compress future data streams (we will call this the "model" or "prior model")

To formalize this, we could talk about the conditional compression of new sensory data, given what we already know - how well can we compress a dataset given another dataset? We can call the length of the compressed representation

This is a very useful concept, but it doesn't quite capture the full picture of what we care about. We are not just interested in compressing new data given our prior knowledge, we are also interested in compressing our prior knowledge itself (I'll argue more for why this is important later). So, we can also add the compressed size of our prior knowledge as

By breaking it down this way, we can start to draw interesting connections to machine learning, prediction, and ultimately, intelligence, and this breakdown is key to many of the theories discussed further below.

Prediction leads to compression #

Let's return from music to simple strings: sequences of bytes (numbers from 0-255).

Where

Large Language Models (LLMs) are, at their core, next-token predictors. Given a sequence of input tokens, they predict the subsequent token. (I'm not claiming this is their only capability, just that they are at least this.)

LLMs also possess a vast amount of knowledge from prior datasets

Given a good predictor of tokens (which we can effectively consider as bytes, as discussed above), it is fairly straightforward to turn that predictor into a good compressor. Instead of storing each byte as is (typically 8 bits), we can run our predictor. For each subsequent byte, the predictor produces a probability distribution over all 256 possible byte values, indicating how likely each is to be the next one.

Here's a simple variable-length encoding scheme [1] (related to unary encoding) which, while not highly efficient in general, highlights the point: the better our predictions, the fewer bits we need to encode new data. (For better practical approaches, look into Huffman coding, used by compressors like gzip, or Arithmetic encoding.)

After running our predictor for the next token and obtaining its probability distribution, instead of storing the actual byte (8 bits), we can order all predicted values by their probability (most likely first, at index 0, second most likely at index 1, etc.). We then store the index of the actual byte in this ordered list. Indexes can be encoded as follows:

| Index | Encoded Value |

|---|---|

| 0 | 10 |

| 1 | 110 |

| 2 | 1110 |

| 3 | 11110 |

This is a variable-length encoding. If our predictor is perfect, the actual next byte is always at index 0. Our data stream then becomes a long sequence of:

101010101010101010101010...

That's 2 bits per byte (or if further compressed, significantly less), reducing the size of our dataset by a factor of at least 4 (from the original 8 bits per byte)!

Hopefully then, it is clear that a good predictor can be transformed into a good compressor. In this sense, prediction implies compression, at least when we talk about the conditional part of compression,

Causally dependent environments and time ordering #

If we are to take the compression analogy further toward human-like intelligence, we must consider the laws of the physical world in which we operate, namely that we are bound by time.

For any agent interacting with an environment, at every single step, action, or moment, there are things that have happened and things that will happen. Crucially, we can only use the past to predict the future.

With a concept like

For example, imagine our dataset

How do we resolve this? we can consider our dataset,

Since good predictions (high probabilities) lead to good compression (few bits), this is akin to maximizing the joint probability of the sequence. Maximizing probability often involves minimizing the sum of negative log-probabilities (surprisal) for each step:

This concept is not new and appears in reinforcement learning, time series forecasting, online learning, and predictive coding. Notably, practical stream-based compression algorithms like gzip also adhere to this ordering constraint, typically because they process data sequentially with limited memory windows, building their models (like dictionaries or frequency tables) from past data to compress current data. This is also pretty much exactly how LLMs are trained.

So, ordering seems to be a very important part of the compression framing. But does it, in practice, significantly impact the overall compression of a dataset if the compressor can view the whole dataset at once?

Good compressors will leverage causal dependencies #

The Hutter Prize is a competition to compress a 1GB extract of English Wikipedia (enwik8) into as small a file as possible. The compressed file must be self-extracting, meaning the decompressor program's size is part of the total. This competition was motivated by the idea that "being able to compress well is closely related to acting intelligently.". The current record as of writing is around 110MB, a compression factor of >9.12 [2].

Notice that the primary objective here - minimizing

To understand this intuitively, we must think about the process that generated the dataset. In the case of language, this is generally a forward process: we write words in a sequence, and as a result, each word is to some extent causally dependent on the preceding words. While acausal relationships might exist (e.g., an author using foreshadowing), they are unlikely to be as strong for a typical compressor as the forward, causal ones.

So, if we attempt to compress a stream of data

We can say our goal is to reduce

To highlight this, we can run a simple experiment by reversing the bytes of a data stream and observing how that changes the compression rate. The hypothesis is that if the datastream is generated by a causally dependent process, it will not compress as well in reverse order when using a compressor that generally works by improving sequential prediction (even if it has access to the whole file).

Here are some results for the enwik8 dataset (100MB):

| Algorithm | Forward Size (bytes) | Reverse Size (bytes) |

|---|---|---|

| gzip | 36,518,329 | 36,608,138 |

| ppmd | 25,845,785 | 25,873,939 |

| zpaq | 35,691,746 | 35,910,432 |

All three algorithms here, even gzip, are better at compressing the data in its original forward order. The difference isn't vast for these general-purpose compressors (as they exploit many local patterns that might be somewhat direction-agnostic), but it's consistently better. If we were to implement our simple predictive coding scheme from earlier by training an LSTM to predict the next token, which is then fed into a variable-length encoding scheme - we would likely see more pronounced differences between forward and reverse ordering.

Compressing the model #

Coming back to our two parts to compression:

So far, we've talked about compressing a data stream

As highlighted by the Hutter Prize, the length (or complexity) of the decompressor (our model),

The value of this minimized sum is then considered the optimal compressed length of the data achievable with the class of models being considered. This captures the trade-off: a highly accurate but complex (long) model

The MDL principle provides a formal interpretation of Occam's Razor, asserting that the model achieving the shortest combined description length for both itself and the data (given itself) best captures the learnable regularities and patterns in the observations [3].

There's another reason to prefer small models though - we can expect small models to generalize better. This comes from the "counting argument" and ideas from Solomonoff's theory of universal induction. Assuming our model can be represented as a program, for any given length of program in bits,

Learning is a broad concept. However, if we assume that as agents in an environment we need to learn a model of the world, then in the context of building predictive models and understanding data, it would be fair to say that part of learning includes "the pursuit of the smallest most accurate model of our environment" - giving us some relationship between learning and compression.

Updating the model #

Francois Chollet's definition of intelligence, as outlined in his paper "On the Measure of Intelligence."

The intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks, with respect to priors, experience, and generalization difficulty.

This definition highlights a particular aspect of intelligence that we haven't really discussed at length - how to build a model. At a high level, Chollet's definition is concerned with the efficiency with which a system can update its model (or acquire new skills), considering data efficiency and, notably, computational efficiency.

The reality for any real-world agent is that its environment is effectively a moving target, or at least, its understanding of it is constantly evolving. For any computationally bound agent, learning is an ongoing process. We can view this through a Bayesian lens, where at each time step, we not only need to make new predictions but also update our model. Schematically:

Whatever this update function is, it should be efficient in terms of computation and data. Francois Chollet's definition pulls our attention to the efficiency of this learning and adaptation process.

The AIXI formulation of universal intelligence #

AIXI is a theoretical mathematical framework for a universal artificial intelligence. It's fundamentally model-based, building on the ideas above, where we effectively place a reward-maximization objective on top of our modeling and compressive goals. It operates something like this:

- An intelligent agent takes actions in an environment to maximize reward.

- Having an accurate model of the environment is crucial for taking actions that maximize reward.

- The quality of the environment model is measured by its predictive power - essentially, how few additional bits are required to encode future states, given the current state and the model.

- The environment model is formed by preferring[4] simpler or shorter explanations (programs) for the observed history, as these are considered more likely to generalize to future, unknown situations.

Compression is again part of the story, but not the whole story. In the AIXI framework, model-building guided by compression principles like preferring simpler explanations is the mechanism through which an "intelligent" reward-maximizing agent can be constructed.

If you have a perfect model of the environment you could theoretically determine reward-maximizing actions by brute-forcing all possibilities and simulating their outcomes. In practice this is intractable for complex or probabilistic environments, and a great deal of effort goes into conducting that search efficiently.

The Free Energy Principle and active inference #

This is a very layman's take on the Free Energy Principle and active inference, glossing over a huge amount of detail and probably a bit of a stretch, but might give you some idea for how this things are possibly connected.

In the AIXI formulation of universal intelligence, compression is part of the story for modeling but not directly for action selection. However, perhaps there is a way to view intelligence more holistically through the lens of compression, thanks to the work of Karl Friston.

Friston's Free Energy Principle (FEP) roughly says that self-organizing systems (like living organisms or intelligent agents) strive to maintain their existence by minimizing the long-term average of surprise. Surprise is the negative log probability of an observation given an agent's internal model of the world:

Minimizing surprise means making sensations more predictable according to the agent's model.

The interesting aspect of FEP that might allow us to view intelligence largely as a compression problem relates to the two ways surprise can be reduced. There are at least two ways that we can reduce future surpise, and the second one is interesting:

- As discussed above, we can update our model to reduce surprise; this is called perceptual inference (or learning).

- We can perform actions that change our sensory inputs so they become more consistent with our model's predictions - this is the high-level idea of active inference.

Here's an intuitive take - why do you get out of bed in the morning? According to this framework, perhaps it's because you expected to be out of bed (based on your internal model of your routines and goals). It might be surprising if you were still in bed at midday if you are never normally in bed at that time. Why would you move out of the way of a car about to hit you? Hopefully, you have never been hit by a car, and to do so would be quite surprising. You know what standing safely feels like, and that's the sensation you expect to be experiencing as you walk along the road.

While AIXI separates its world-modeling (based on compression principles) from its reward-maximization for action selection, Friston's Free Energy Principle and active inference offer a potentially unifying perspective, suggesting that agents strive to minimize future surprise (or prediction error) not only by refining their internal models of the world, a process deeply linked to compression, but also by actively choosing actions that make their sensory experiences align with their predictions and preferred states (which are part of their generative model). In this view, action selection itself becomes part of the same surprise-minimization (and thus, compression-oriented) objective. If an agent can successfully make its world less surprising, its experiences become more 'compressible' by its internal model, which includes its goals.

This leads to the compelling, if ambitious, idea that intelligence, in its quest to understand and effectively navigate the world, might fundamentally be solving an ongoing compression problem.

Notes

- [0]: This inequality isn't universally guaranteed for all possible data

or all compressors. For instance, truly random (incompressible) data cannot be compressed. For very small , the overhead of the compressor itself might make . However, for meaningful, structured data that contains redundancies, good compressors readily achieve this. - [1]: The specific scheme shown below (

10for index 0,110for index 1, etc.) is a type of prefix code where1s might accumulate and0acts as a terminator. The key takeaway is the principle of using fewer bits for more probable symbols, which more advanced schemes achieve with greater efficiency. - [2]: The current Hutter Prize record achieves a compression ratio of over 9.12 for the 1GB enwik8 dataset. This means each original byte of data is represented by approximately

bits. This figure is remarkably close to some of Claude Shannon's lower estimates for the entropy of printed English (around 0.6 to 1.3 bits per character), suggesting these compressors are approaching fundamental limits of predictability in this large text corpus. - [3]: MDL doesn't guarantee finding the one "true" underlying model of a process. Rather, it identifies the model that provides the most statistically powerful inference and efficient summary based on the available data and the chosen class of models.

- [4]: To be more precise, AIXI, through Solomonoff induction, doesn't pick a single "simplest" model. It considers a weighted average (a Bayesian mixture) of all computable programs that explain the observed history. Simpler programs (those with shorter descriptions, i.e., lower Kolmogorov complexity) are given exponentially higher weights. This mixture is then used for prediction.