Exploring LLM performance on the ARC training dataset

2024-11-14 / 13 min read- Building the ARC explorer

- Understanding LLM performance

- Computable properties

- Solution descriptions

- Tagging

- Colors

- Initial analysis

- Overall LLM performance

- Grid sizes

- Tag accuracy

- Tag counts

- Description length

- Grid properties

- Next steps

TLDR: I tagged and described 200/400 ARC training dataset tasks, merged it with evaluation data for some LLMs, did some analysis on it, and put it all on a site so you can explore it.

ARC is a hard problem, and exploring the dataset first felt like the obvious starting point. The site originally started as a way for me to just explore and take notes on the problems, but that turned into completing a taxonomy of tags and descriptions and a few other things I got carried away with.

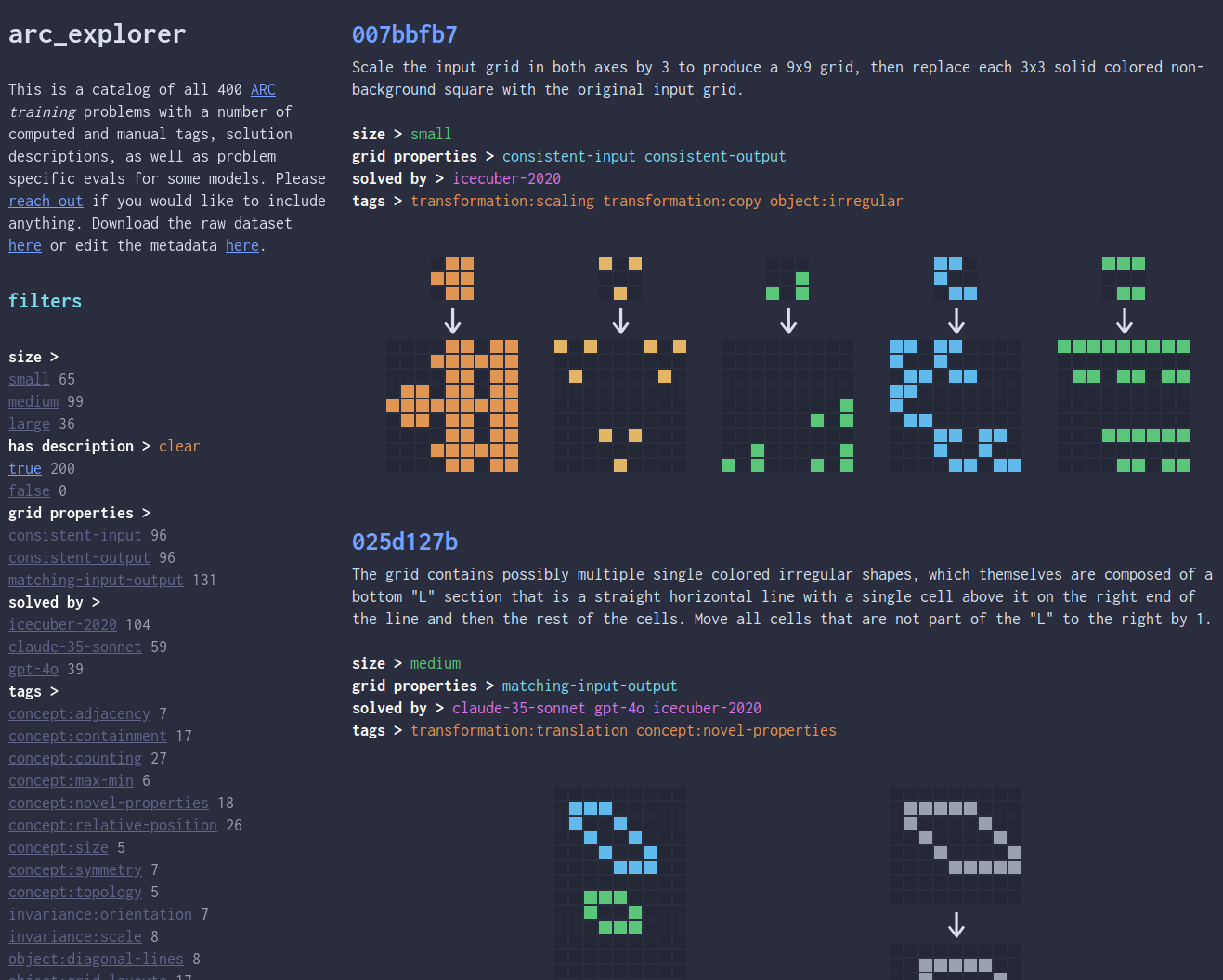

You can explore the tagged problems here yourself, download the raw JSON file, or read on if you want some more details on the dataset, how I went about this and some basic analysis on it.

If you are interested in helping to complete the tagging and descriptions for the remaining 200 problems, please reach out to me on twitter, or you can contibute directly to it here.

Building the ARC explorer #

Understanding LLM performance #

My main objective with this project was to understand where LLMs perform well [0] and where they perform poorly. Thankfully there are notebooks for GPT-4, Claude Sonnet, as well as some of the other public leaderboard submissions such as Icecuber 2020.

Sadly I don't have O1 access, so if anyone wants to help produce a submission.json file for O1, I would be very grateful, and bonus points if you can drop it in with the other submissions here.

Computable properties #

There are a number of computable properties that I could programmatically generate such as:

- The grid size - I broke this down into small, medium, large based on the average number of input sample cells, with boundaries of 64 and 256.

- Whether they have consistent input sizes, output sizes, or whether the input size always matches the output.

- Other properties are also calculated, like the number of distinct colors used, if the input colors match the output colors, etc. These are all in the raw JSON file, but not all of them are in the site itself.

Solution descriptions #

Ultimately there were only limited things I could easily compute, and I couldn't find a way to avoid [1] doing descriptions and tags manually.

The descriptions of the problems are trying to be a human like solution to the problem. This is how my brain worked, and not necessarily how a computer might solve them.

Coming up with ways to actually describe the solution in a concrete way was much harder than I had imagined, these are not things that one usually uses words to describe, and I suspect that many of my descriptions will be completely incomprehensible to someone who isn't me.

The complexity of the solution procedure varies quite significantly across problems, even on the training set which is supposed to be easier than evaluation or test, some of the problems require a very long sequence of steps to solve, here's an example description:

Identify the several disconnected, same colored objects that have cell values only on the edge of the outline of a perfect square (which may also be 1x1) and are symmetrical in both axes and may extend beyond the edge of the input grid. The output grid has the size of the largest such outline, and its background color is the same as the background (most common) color of the input grid. Each of the identified outline shapes is copied into the output grid and centered in the middle.

Or sometimes, they are much simpler:

Draw a red outline around any horizontally or vertically connected 2 green cells.

Tagging #

Whilst there are some problem types that recur several times, some of which are almost identical, that was usually not the case. There are still problems which I don't know how to tag properly.

I ended up with 37 tags, which evolved considerably as I went through. This took a few second passes, and then some grouping.

The full list I eventually come up with is below, within groups and with a short description of each both are certainly imperfect. I drew inspiration from David Bonin's Task Tagging Notebook on Kaggle, but ended up with a slightly smaller and more focused set.

| group | tag | description |

|---|---|---|

| objects | rectangle | requires identifying or producing rectangular objects |

| line | requires identifying or producing line objects | |

| outline | requires identifying or producing rectangular outlines | |

| irregular | requires identifying or producing irregular objects | |

| overlapping | requires recognizing objects that overlap but are distinct | |

| multicolor | requires identifying objects that are composed of multiple colors | |

| diagonal-lines | requires identifying or producing diagonal lines | |

| grid-layouts | requires recognizing that objects are laid out in some larger grid | |

| transformations | copy | copy one region to another |

| layering | requires understanding of in front or behind | |

| filling | requires filling some shape | |

| rotation | requires rotating a object | |

| flipping | requires flipping an object | |

| translation | requires translating/moving an object | |

| scaling | requires scaling an object | |

| recolor | requires changing the color of an object | |

| draw-lines | requires drawing a line | |

| procedures | search | requires identifying some specific cell or object with a certain property, sometimes multiple |

| agentic-program | can be solved with a program like solution that has conditional behaviours, e.g draw a line in a direction until some condition is met | |

| convolutional-program | can be solved by some fixed program that is convolved across the entire grid, e.g the same rule can be applied to each cell based on some fixed relative set of neighbors | |

| for-each | requires applying a procedure repeatedly multiple matching objects or cells | |

| alignment | requires aligning objects so that some cells or specific properties of it are aligned, e.g copying a pattern such that the blue cells overlap some other blue cells on the grid | |

| ordering | requires ordering some objects according to some property to produce the output | |

| invariances | scale | requires applying a rule at multiple scales |

| orientation | is invariant to the orientation of the grid | |

| concepts | size | requires understanding of sizes of objects, cell count, volume, width, or height |

| max-min | requires understanding or computing maximums and minimums of other properties | |

| topology | requires understanding of topology, this mainly covers toroidal shapes | |

| symmetry | requires understanding of symmetry, or where symmetry is used to solve the problem | |

| relative-position | requires understanding of the relative position of different objects, e.g left of, right of, above, below etc, or mapping a relative position between two different objects/grids | |

| containment | requires understanding the difference between inside and outside | |

| adjacency | requires understanding of if objects are adjacent or touching | |

| counting | requires counting some property usually part of an order or min/max, e.g number of cells of a color | |

| novel-properties | requires recognizing novel properties of objects that aren't covered by any other explictly tagged concept | |

| other | data-dependent-grid | the grid size is dependent on the input cell colors and layout |

| multi-sample-mapping | requires learning some arbitrary mapping between colors/shapes/sizes etc from multiple samples | |

| pattern-completion | determine what a pattern is and and complete it, e.g fill in the missing cells |

Colors #

The colors used in the images (and therefore also in some of the descriptions) are not the same as those on the ARC website. I used a different color palette for no particular reason, but now that the descriptions reference them, here is the full mapping:

| color | name | number |

|---|---|---|

| zero | 0 | |

| blue | 1 | |

| green | 2 | |

| red | 3 | |

| yellow | 4 | |

| purple | 5 | |

| cyan | 6 | |

| orange | 7 | |

| gray | 8 | |

| teal | 9 |

Initial analysis #

There are probably limited, non-obvious insights to draw out here from the questions I've asked so far. Bigger grids, grids with more tags, and longer descriptions are all harder for the models to solve, unsurprisingly.

The tags have the potential to be an interesting signal, although I believe this would greatly benefit from some additional data and tagging the remaining 200 problems to reduce the uncertainty a little bit.

Overall LLM performance #

The performance of the 3 evaluated models here is much better than their scores on the public eval leaderboard, and that isn't a surprise as the training set is acknowledged to be much easier than the evaluation set. Otherwise the relative performance is in-line, which is a reasonable assurance that nothing went horribly wrong here.

| solver | accuracy |

|---|---|

| gpt-4o | 80/400 (20.0%) |

| claude-35-sonnet | 121/400 (30.25%) |

| icecuber-2020 | 201/400 (50.25%) |

Grid sizes #

For all 3 models, the bigger the grid, the worse the performance. gpt-4o seems particarly affected by the input grid size, with a 7.937% accuracy on large (> 256 cell) grids, compared to 39.683% on small (< 64 cell) grids. On the small grids, gpt-4o is not far off sonnet at all. icecuber-2020 is the least affected by grid size, but there is still an effect.

It's unlikely it's the grid size itself that is the problem, but rather the fact that more complex problems inevitably have to be put on larger grids, so I'm intepreting this as a proxy for problem complexity.

| solver | large | medium | small |

|---|---|---|---|

| gpt-4o | 5/63 (7.937%) | 25/210 (11.905%) | 50/127 (39.37%) |

| claude-35-sonnet | 12/63 (19.048%) | 51/210 (24.286%) | 58/127 (45.669%) |

| icecuber-2020 | 25/63 (39.683%) | 94/210 (44.762%) | 82/127 (64.567%) |

Tag accuracy #

The tags are quite sparse, and the margin of error on these numbers is pretty high so take it with a pinch of salt. I've only included tags with at least 5 problems. The accuracy numbers are based on whether either gpt-4o or sonnet correctly solved the problem, which has a baseline accuracy across all problems of 32.75%.

I won't draw any conclusions from this. As you would expect (and I hoped), some of these tags seem to impact performance quite a lot. But, it's hard to tease out what is that tag itself versus some other correlations with the presence of that tag.

| tag | accuracy (gpt4o|sonnet) |

|---|---|

| object:overlapping | 5/9 (55.556%) |

| other:pattern-completion | 9/17 (52.941%) |

| transformation:flipping | 8/18 (44.444%) |

| procedure:convolutional-program | 11/25 (44.0%) |

| transformation:recolor | 10/27 (37.037%) |

| object:grid-layouts | 6/17 (35.294%) |

| object:rectangle | 15/43 (34.884%) |

| other:multi-sample-mapping | 3/9 (33.333%) |

| concept:max-min | 2/6 (33.333%) |

| concept:relative-position | 8/26 (30.769%) |

| other:data-dependent-grid | 5/17 (29.412%) |

| transformation:copy | 12/42 (28.571%) |

| concept:counting | 7/27 (25.926%) |

| object:diagonal-lines | 2/8 (25.0%) |

| object:outline | 5/20 (25.0%) |

| object:line | 9/37 (24.324%) |

| concept:containment | 4/17 (23.529%) |

| transformation:translation | 3/14 (21.429%) |

| procedure:agentic-program | 7/35 (20.0%) |

| transformation:draw-lines | 5/26 (19.231%) |

| procedure:search | 6/35 (17.143%) |

| procedure:for-each | 3/18 (16.667%) |

| concept:novel-properties | 3/18 (16.667%) |

| object:multicolor | 5/30 (16.667%) |

| concept:symmetry | 1/7 (14.286%) |

| transformation:scaling | 2/15 (13.333%) |

| object:irregular | 6/46 (13.043%) |

| invariance:scale | 1/8 (12.5%) |

| procedure:alignment | 1/9 (11.111%) |

| transformation:rotation | 1/9 (11.111%) |

| transformation:layering | 2/18 (11.111%) |

| invariance:orientation | 0/7 (0.0%) |

| concept:adjacency | 0/7 (0.0%) |

Tag counts #

More tags generally means there is a bit more going on in the problem, requiring multiple concepts or processes to be composed in some way. This seems to turn out to be a pretty good signal of overall accuracy with the accuracy generally trending much lower for those problems with more tags, while the single tag problems are solved at a rate of 54.167% which is much higher than the baseline.

| # tags | accuracy (gpt4o|sonnet) |

|---|---|

| 1 | 13/24 (54.167%) |

| 2 | 19/45 (42.222%) |

| 3 | 16/42 (38.095%) |

| 4 | 7/41 (17.073%) |

| 5 | 4/23 (17.391%) |

| 6 | 3/12 (25.0%) |

| 7 | 1/9 (11.111%) |

Description length #

Short descriptions are solved 52.308% of the time, compared to 21.739% for long descriptions. Interestingly, medium or long descriptions don't have much of a difference, which perhaps says something about the quality of my descriptions.

| description length | accuracy (gpt4o|sonnet) |

|---|---|

| short | 34/65 (52.308%) |

| long | 15/69 (21.739%) |

| medium | 14/66 (21.212%) |

Grid properties #

Well it turns out these don't have much variance in between them at all, but as they are on the site, I'm including them for completeness.

| grid properties | accuracy (gpt4o|sonnet) |

|---|---|

| non-matching-input-output-grid | 48/138 (34.783%) |

| inconsistent-input-grid | 64/190 (33.684%) |

| consistent-output-grid | 70/210 (33.333%) |

| data-independent-output | 126/383 (32.898%) |

| inconsistent-output-grid | 61/190 (32.105%) |

| consistent-input-grid | 67/210 (31.905%) |

| matching-input-output-grid | 83/262 (31.679%) |

| data-dependent-output | 5/17 (29.412%) |

Next steps #

The obvious question to ask is - what happens when you feed these descriptions and tags into an LLM as additional context for solving the problems. I'm not hopeful that they will change much, but it's not too hard to ask the question (update: I did this, it brings Claude performance up to 35.25% from 30.25% when testing against the 200 described tasks).

I'd like to complete the tagging process, and extend to the evaluation dataset once ARC 2025 is released when I find the time, although I'd estimate this is around 10 hours or fairly gruelling effort.

There are many more questions I'd like to answer from the data. E.g looking at compressibility of the grids, or the conditional compressibility (e.g len(zip(input, output)) / len(zip(input)) or something like that). Hopefully others would be interested in taking their own analysis further too.

Getting a few more models in here would be nice, again with the obvious caveats of it being a public dataset.

And finally, as others have tried, seeing how models fare at trying to predict the tags themselves.

And of course, trying to take a shot at ARC itself...

Notes

- [0]: There are obvious problems with scoring these models against the training (or any public dataset).

- [1]: I did initially explore a suggestion from the community to try to use an LLM to describe solutions based off of re-arc verifiers, but this wasn't very successful, and after looking at the code for the verifiers, it was not surprising that it didn't work.